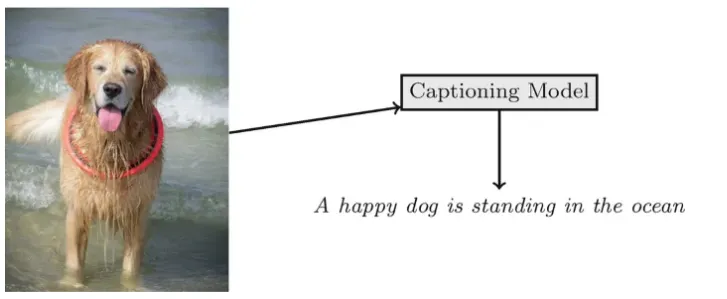

Image and Video Captioning

Technologies Used

Project Overview

I worked on this project while attending the Artificial Intelligence and Multi-Agent Systems Summer School at the AI-MAS Laboratory, University Politehnica of Bucharest. The goal was to improve an existing image and video captioning model through curriculum learning, i.e. by presenting training examples in order of increasing difficulty rather than in random order.

Key Features

- Exploring several training strategies based on curriculum learning

- Gradually increasing example difficulty, measured by caption length, syntactic structure, and vocabulary difficulty

- Evaluating on standard benchmarks: Flickr8k (image captioning) and MSVD (video captioning)
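The README does not show how difficulty was scored or how the schedule was paced, so the following is only a minimal sketch of one possible approach, not the project's implementation. It assumes a difficulty score of caption length plus mean word rarity (negative log frequency), and a linear pacing schedule in which training starts from the easiest 20% of examples and reaches the full dataset at the last step. All names (`caption_difficulty`, `rank_by_difficulty`, `competence`, `curriculum_batches`, `c0`) are hypothetical. Syntactic structure is left out because scoring it would need a parser.

```python
import math
import random
from collections import Counter


def caption_difficulty(caption, word_freq, total_words):
    """Hypothetical difficulty score: caption length plus mean word rarity.

    Rarity is the average negative log relative frequency of the caption's
    tokens, so captions with rare vocabulary score higher. Syntactic
    structure is not modelled here (it would need a parser).
    """
    tokens = caption.lower().split()
    if not tokens:
        return 0.0
    # Unseen words are treated as having frequency 1 to avoid log(0).
    rarity = sum(-math.log(max(word_freq[t], 1) / total_words) for t in tokens) / len(tokens)
    return len(tokens) + rarity


def rank_by_difficulty(captions):
    """Sort captions from easiest to hardest under caption_difficulty."""
    word_freq = Counter(w for c in captions for w in c.lower().split())
    total_words = sum(word_freq.values())
    return sorted(captions, key=lambda c: caption_difficulty(c, word_freq, total_words))


def competence(step, total_steps, c0=0.2):
    """Linear pacing: fraction of the ranked data available at a given step.

    Starts at c0 (the easiest 20% by default) and reaches 1.0 at the last step.
    """
    progress = step / max(1, total_steps - 1)
    return min(1.0, c0 + (1.0 - c0) * progress)


def curriculum_batches(captions, total_steps, batch_size, seed=0):
    """Yield (step, batch) pairs, sampling only from the easiest examples
    that the current competence allows."""
    ranked = rank_by_difficulty(captions)
    rng = random.Random(seed)
    for step in range(total_steps):
        n_available = math.ceil(competence(step, total_steps) * len(ranked))
        # Never let the pool shrink below one batch.
        pool = ranked[:max(batch_size, n_available)]
        yield step, rng.sample(pool, min(batch_size, len(pool)))


if __name__ == "__main__":
    captions = [
        "a dog runs",
        "a dog runs on grass",
        "a brown dog leaps over a wooden fence in the park",
        "a cat sits",
    ]
    for step, batch in curriculum_batches(captions, total_steps=5, batch_size=2):
        print(step, batch)
```

In a real captioning pipeline each caption would stay paired with its image or video clip; the sketch ranks caption strings alone to keep the idea visible. A slower pacing schedule (larger share of steps spent on easy examples) is one plausible reason a model could drift toward generic captions.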

Results

The implemented models achieved competitive results on standard benchmarks, with the most notable gains in BLEU score on Flickr8k. However, because the models spent more of their training on simpler examples, their predicted captions tended to be more generic and less descriptive.

Learning Outcomes

This project gave me hands-on experience with deep learning research that combines computer vision and natural language processing, and deepened my understanding of both fields.